No one comes to work intending to do a bad job. Even the least motivated employees will say that they would not want to be associated with poor performance. So, as leaders, it is up to us to help our teams meet or exceed their own expectations. One way we can do this is by better understanding why people sometimes fall short of expectations even when they are trying to perform well. The science of analysing mistakes and violations is fascinating, and by getting your head around it, you can help your team reach higher levels of performance consistently.

Your triggers for analysis should be accidents, near misses and quality issues. Safety incidents and losses of production quality are very often the result of human factors. By understanding why people make the mistakes they do, we can better understand and influence their behaviour and help them avoid such mistakes in the future. By applying this learning, we can also help ensure that others do not make the same mistakes.

The use of behavioural science in accident investigation has been a feature of some industry sectors for almost three decades; however, others have yet to embrace it. You don’t have to be a psychologist to explore human factors. In fact, all you have to do is ask questions. When you get an answer, just ask why.

If your business moves large numbers of people around by aeroplane, you will have a good handle on risk and consequence. Human errors and equipment breakdowns that would be quite minor on the ground can have catastrophic consequences in the air. It is no surprise, then, that this industry led the way in applying human factors to incident investigation. If you work in manufacturing, the process industries or engineering, the following case will probably contain some familiar attitudes and behaviours.

June 10, 1990: Miracle in the skies on BA Flight 5390 as captain is sucked out of the cockpit – and survives.

You may recall a high-profile event in 1990 in which an airline captain was sucked out of a British Airways plane at high altitude when the windscreen blew out. Amazingly, he survived the ordeal thanks to his crewmates, who took swift and decisive action to keep hold of the captain and get the plane down. The investigation centred on discovering why the windscreen came out at altitude. Investigators soon found that the wrong bolts had been used to install the windscreen, which had been fitted shortly before this flight. The independent investigator was struck by the honesty of the maintenance engineer who fitted the windscreen: he made no attempt to hide his actions. The investigator brought in behavioural scientists, the first time this had been done in an engineering investigation. By using techniques to put the engineer at ease and questioning him gently about the events, they were able to get to the root cause of the incident. Here are some of the maintenance engineer’s remarks and behaviours; see how many you’ve seen before:

- He was working alone and had more jobs than he thought could be done in the time allowed. Nevertheless, he worked his way through them.

- The aeroplane was positioned in the hangar in such a way that access to the windscreen was very difficult. He persevered, using steps and leaning across to reach it.

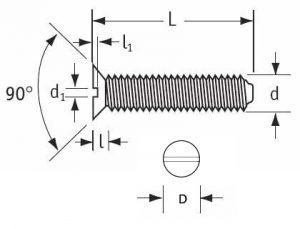

- He removed the bolts that were fitted and noticed they were too long. He then looked for replacement bolts by comparing the removed bolts with those in stock.

- He didn’t use the computer to check which bolts should be used, because he believed this would take too long.

- Instead, he got into his car and drove to the other side of the site because he couldn’t find what he needed in the local store.

- When he went into the stores and told the storekeeper that he wanted some 7D bolts for the windscreen, the storekeeper told him that the BAC One-Eleven used 8D bolts. He ignored the storekeeper.

- When asked why he ignored the storekeeper, he said, “I’m an engineer and he isn’t.”

- He signed the job off and completed all the required paperwork diligently.

Here we see a man trying to do the best job he possibly can. He chose to replace the bolts instead of reusing the old ones. He made the effort to drive across the site to find the right parts. He worked hard all night to complete what he felt was an unrealistic workload. However, his decision not to use the computer to check which parts were required resulted in a significant incident. Even when the storekeeper pointed out that he was about to make an error, he carried on. His decisions were based on perceptions he held, perceptions that until then had never been challenged. He believed that using the computer was time-consuming, yet it couldn’t possibly have taken longer than driving to the other side of the site. Even though he knew the bolts he removed had been incorrect, he went looking for bolts of the same diameter in the store. Because he was an engineer, he assumed that he knew better than the storekeeper, even though several facts suggested that the storekeeper was right, as he turned out to be.

If the engineer had refused to do the job because of where the plane was positioned, this would have flagged an issue to his boss. If he had said that he couldn’t complete the jobs in the time allowed, this too would have flagged an issue. By working around these issues, he allowed risks to go unchecked. If the engineer had told his boss that he didn’t use the computer because it took too long, he could have received additional training, or the software could have been improved.

Each of these behaviours introduced significant risk to the business; however, the engineer described all of them as examples of being conscientious and doing his best to get the job done. Can you envisage a similar set of circumstances existing in your organisation?

If a human factors investigation had not been carried out, these risks would have continued. These potentially dangerous behaviours and practices had been in place for a considerable time. If the investigation had simply found the engineer at fault, disciplined him and moved on, the same accident would have been just as likely to happen after the investigation as before it.

By analysing the human factors and asking why decisions were made, the airline and the maintenance company were able to implement new processes and checks to prevent these events from recurring. By ensuring that sufficient time is allowed for maintenance, that part numbers are checked, that access is always suitable and sufficient, and that sign-off is performed by a second person who witnesses the finished job, this and many other failures can be prevented.

All BA aeroplane windscreens are now fitted from the inside, so the positive pressure of the cabin provides additional force to hold them in. This engineering solution has prevented this exact incident from recurring. The investigation into human factors is likely to have prevented countless other, unforeseen incidents.

Also, by analysing the human factors involved, the engineer, who always set out to do a good job, is now better placed to meet his own expectations, as are his colleagues.

Check out HSG48, the HSE’s guidance on reducing error and influencing behaviour, to learn more about human factors. If your incident investigation procedure doesn’t already include a human factors component, consider adding one. Don’t limit yourself to accident investigation: the same learning applies to engineering and quality failures too.

http://www.hse.gov.uk/pubns/priced/hsg48.pdf