To err is human.

On 6 June 1992, Copa flight 201 ended in the worst air disaster in Panama’s history. The flight, bound for Cali, Colombia, came down in the jungle, killing everyone on board. Initial thoughts centred on the possibility of a collision with a drug-running aircraft or a bomb on board. The area is home to organised crime heavily funded by drug running. Drug runners often use private planes, turn off their transponders to avoid detection and have no communication with air traffic control. As a result, it is impossible to track the location of such planes, and collisions are difficult to predict and therefore to avoid. However, investigation teams found no wreckage from another plane and no evidence of a collision. The location of the major plane components in the jungle also indicated that no mid-air explosion had occurred. So why did the Boeing 737 come down? It was important for the investigators to find the cause as quickly as possible, as the 737 was the most popular passenger aircraft in service. If a design flaw had led to the crash, the airline community needed to know.

In addition to the possibility of sabotage or a collision with an unauthorised aircraft, the investigators had several other red herrings to deal with. The captain had requested a revised route because of very bad weather, and eyewitnesses had reported hearing a large explosion and seeing a huge ball of flames in the sky. All of these theories were investigated, but none turned out to be the cause of the accident. The investigation team were really up against it: multiple ‘obvious’ theories were being offered, the terrain was almost impassable, and the public and the Panamanian government wanted answers. On the first day of the investigation, one team member had a cardiac arrest and eight snake bites were recorded. This was not an easy investigation. The flight data recorder and cockpit voice recorder were eventually found. With no survivors, these recorders were critical to working out what happened in the final minutes of the flight.

The tenacity of the investigator cannot bring back the lives that were lost, but it can save countless lives in the future.

The voice recorder was found to be broken, with the tape unspooled inside. After painstakingly reconstructing the tape, the team were able to listen to the recording. However, the recording on the tape was from a flight several days earlier. It appeared that the recorder had broken in normal service and had not been repaired. This is almost unheard of. It seemed as though the investigation team were being thwarted at every step.

The flight data recorder, however, held some answers. It indicated that the plane went through some dramatic banking and rolls before going into a final dive. In an attempt to determine the cause of such erratic flight, the team tested the control surfaces and every other element that could have caused the pilots to lose control. They found no evidence of defects. When they moved on to test the instruments that provide critical attitude indications to the pilots, they found a fault.

The plane is fitted with vertical gyroscopes (VGs), which detect the attitude of the aircraft in the air. They detect whether the nose is tilted up, down or level, and whether the plane is banking left or right. There are three of them because this indication is probably the most critical one the pilots have. If you can’t see the horizon or the ground, the gyroscope is the only instrument that tells you the attitude of the plane. The indication is presented to the pilots on three separate Attitude Director Indicators (ADIs). So on the 737 you have three independent gyroscopes providing information to three independent ADIs. This way, if an instrument is ever in doubt, you can cross-check it against the others.

Investigators found that one ADI was faulty: an intermittent cable fault between the VG and the ADI caused it to stick and show a constant reading even when the gyroscope was detecting movement. If a pilot had been relying on this indicator, they would soon have made incorrect control inputs, with the potential to lose control of the plane. So a probable cause had been found. However, with three independent indicators in the cockpit, how could a defect in one cause an accident? Furthermore, if one instrument reads differently from the others, this is highlighted to the pilots so they can take action.

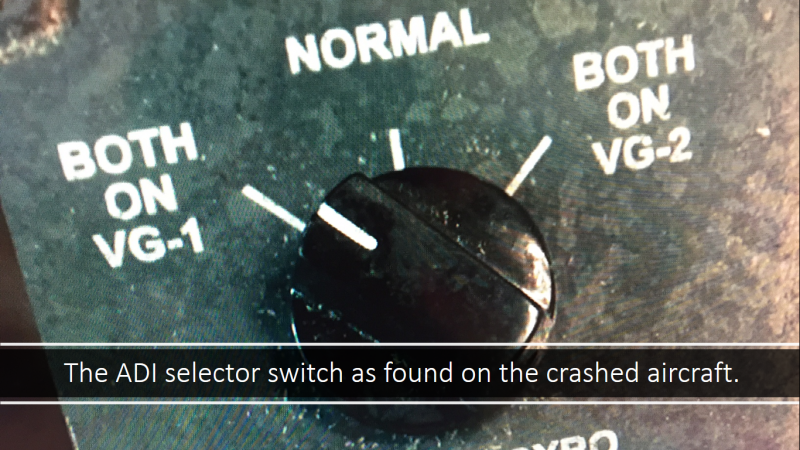

The three ADIs are located as follows: one in front of the captain, one in front of the first officer and one between the two pilots (the auxiliary). In normal conditions each is fed from its own vertical gyroscope. The crew can change which gyroscope feeds which indicator, which allows them to remove erroneous indications if there is a known fault. On flight 201, the investigation team found the selector switch positioned to feed both the captain’s and the first officer’s indicators from the defective vertical gyroscope. This meant that both pilots saw the same incorrect indications.

The flight data recorder records whatever indications are presented to the captain. So the team now knew that the aircraft movements they had reviewed were not correct. When they plotted the actual movements of the plane using the correct indicator data, the answer to the mystery unfolded. The erratic movements of the plane exactly mirrored the faulty ADI data. When the ADI indicated a bank to the left, the pilots rolled the plane to the right to correct it. Because the indicator was stuck, the corrective inputs appeared to have no effect, so further inputs were given, which eventually put the plane into an inverted position. Unable to maintain flight in this attitude, the plane went into a dive. Recovering from a dive with a non-responsive ADI is an impossible task for the pilots. The dive was so severe that the plane exceeded the speed of sound and started to break up in the air. Ultimately the plane hit the ground, killing those still on board.

What is obvious to the engineer at a desk is not always so obvious to an operator managing an issue.

So why would an experienced captain set both ADIs to read from the defective gyroscope? The investigation team found that the selector switch had clear labels which even a layperson could have understood. The options were normal, both on VG1 or both on VG2. VG1, the captain’s vertical gyroscope, had failed. The switch was set to both on VG1. This was, critically, the left-hand option.

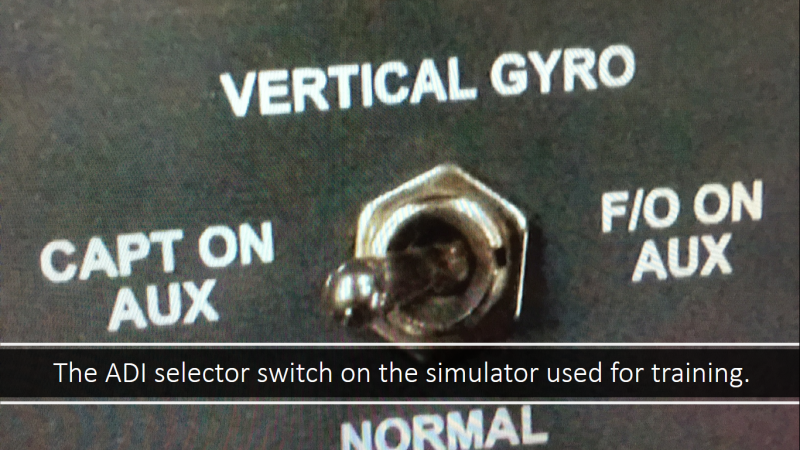

Following a typical investigative protocol, the team looked at the training of the captain and the first officer. The captain had been trained in a 737 simulator with a different selector switch. On the simulator switch, turning left would select the auxiliary vertical gyroscope. This would have fixed the fault and allowed normal flight. However, turning the switch to the left on this 737 meant that neither pilot had a valid attitude reading.

This is a classic example of a rule-based error. It is not clear which pilot operated the switch; however, it appears that they had learned to turn the selector switch to the left if there was an error on the captain’s ADI. In the simulator this would always have fixed the problem: if the captain’s ADI failed, switching to the auxiliary gyroscope would always resolve the issue. The learned rule was correctly applied, but in this instance it was the wrong thing to do.

I have been involved in many investigations and have seen such errors fairly frequently. Often you hear engineers comment that the right behaviour was obvious: ‘the switch was clearly marked’, ‘the equipment ID comes up on the screen’, ‘it’s obvious which breaker to open’. These are typical comments from the designer or the engineer responsible for the equipment. What is obvious to the engineer at a desk is not always so obvious to an operator managing an issue, especially one juggling several issues at once. Always place yourself in the position of that operator.

We learn from past consequences and expect that what worked before will work again. Imagine I took your car and fitted a new indicator switch on the steering column. It looks and feels like the old one, but the selections are reversed: what was left is now right, and vice versa. The stalk is clearly marked to show which action operates which indicator. However, I hand your car back with no mention of this change. Is it possible that, at the first junction you approach, you would signal the wrong way? Furthermore, is it possible that you would fail to notice this error?

Incident investigation and subsequent corrective action should focus on human behaviour. Identifying human factors as a cause is far from the end of the process. Analysing the type of error or violation and then understanding why this happened is the key to designing out the issue in the future.

We try to influence human behaviour in many ways. However, a better understanding of human behaviour means we need not set people up to fail. Rather than training people to check for changes every time they carry out an operation, in some cases it is better to design the equipment with human behaviour in mind in the first place. Where this can’t be done, thorough education and reinforcement are required to avoid such rule-based errors.

Following the crash of flight 201, Copa did not tell their pilots to check the wording on every switch before using it; instead, they standardised their cockpits so that every switch operated the same way.

Managing human behaviour is critical to obtaining compliance; understanding human behaviour is critical to avoiding errors.